Unlock Seamless Access to Leading AI Tools and Technologies!

Gain access to a wide range of AI and machine learning tools, including ChatGPT, DALL-E, Gemini, Veo, Vision, DeepSeek, Claude, Mistral, Ollama, Grok, Perplexity, LLaMA, and many more. These advanced technologies provide powerful capabilities to enhance your projects, streamline processes, and drive innovation in various applications. Whether you're looking to generate text, create images, or explore complex data, these tools offer seamless integration to elevate your work.

Effortless access to ChatGPT, DALL-E, Gemini, Veo, Vision, DeepSeek, Claude, Mistral, Ollama, Grok, Perplexity, LLaMA, and numerous others.

ChatGPT: OpenAI's ChatGPT is an AI chatbot that can engage in human-like conversations, answer questions, and generate many kinds of written content. It can also analyze uploaded images, suggest creative ideas, translate between languages, and generate code.

DALL-E: OpenAI's DALL-E 3 is an image generation model that creates a wide variety of images from text prompts, including detailed and intricate images that contain text, landscape and portrait formats, and different artistic styles. OpenAI's DALL-E models can also generate variations of an image and edit existing images, letting users modify or expand on them.

Gemini: Google's Gemini is a versatile AI assistant that can answer questions, generate content, and work with other Google apps and services. It can connect to apps such as Gmail, Google Calendar, and Google Maps, so you can manage daily tasks without switching between apps. Gemini can also analyze data, generate images, and help with writing and brainstorming.

Veo: Google's Veo generates realistic, high-quality videos from natural-language text and image prompts, including videos of people of all ages. Depending on the content of your text or image prompt, Veo may return an error indicating that your Google Cloud project needs approval for person or child generation.

Vision: Google Cloud’s Vision AI is a suite of tools that combines computer vision with other technologies to understand and analyze images and video, and to integrate vision detection features into applications, including image labeling, face and landmark detection, optical character recognition (OCR), and tagging of explicit content.

DeepSeek: DeepSeek's AI models, such as DeepSeek-R1, are known especially for their reasoning capabilities and their ability to perform complex tasks. They can analyze large datasets, generate coherent and contextually accurate responses to complex prompts, and solve mathematical problems through step-by-step reasoning. DeepSeek models are also used for writing, summarizing, and providing detailed explanations, and they support multiple languages.

Claude: Anthropic's Claude is an AI assistant that can generate text, understand and respond to prompts, and process information from various sources. It can be used for tasks like summarizing documents, writing creative content, translating languages, and even generating code.

Mistral AI: Mistral AI's models, such as Mistral Large, can generate text, assist with coding, and handle complex reasoning, and the company's dedicated OCR (Optical Character Recognition) model can extract text from documents, including those containing images and mathematical expressions. The models can also be used for chatbot development, summarization, and content creation.

Ollama: Ollama is a tool that simplifies the process of running open-source Large Language Models (LLMs) directly on your computer. It acts as a local model manager and runtime, allowing you to download, manage, and interact with models without needing cloud-based APIs. Essentially, it lets you run LLMs locally, giving you more control and reducing reliance on third-party services.
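To make the local workflow concrete, here is a minimal sketch of calling a locally running Ollama server from Python using only the standard library. It assumes Ollama is installed and serving on its default port (11434) and that a model has already been downloaded; the model name `llama3.1` in the usage comment is an illustrative assumption, so substitute whichever model you have pulled. The helper names `build_generate_request` and `generate` are hypothetical, not part of Ollama itself.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of partial chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send a prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # the generated text is in the "response" field


# Usage (requires a running Ollama server with the model already pulled):
# print(generate("llama3.1", "Explain what a local LLM is in one sentence."))
```

Ollama streams responses by default; disabling streaming keeps the sketch short, while streaming suits interactive chat interfaces better. No prompt or response leaves the machine, which is the main draw of running models locally.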

Grok: Grok is a conversational AI assistant and chatbot developed by Elon Musk's artificial intelligence company xAI.

Perplexity AI: Perplexity AI is an AI-powered search engine that provides real-time, concise answers to questions, often with citations to reliable sources. It's designed to be conversational and understand context, offering follow-up questions and summarizing information. Perplexity can be used for various tasks like answering questions, summarizing articles, generating code snippets, and even creating images.

LLaMA (Meta AI): Meta's Llama 3.1 is a language model from Meta AI designed for a broad range of natural language processing tasks. It excels at text-based applications, offering multilingual support, real-time inference, and integration with external tools and APIs. It is also designed to reduce "hallucinations" (confidently generated incorrect information) and to be steerable, allowing developers to control its behavior.

Looking for a smart solution for your business? Contact us.

AI vs machine learning vs LLM

In the rapidly evolving world of technology, terms like Artificial Intelligence (AI), Machine Learning (ML), and Large Language Models (LLMs) are often used interchangeably, but they represent distinct, interconnected concepts. Imagine standing at a crossroads of innovation, where each path represents a different technological approach that is reshaping how we interact with digital systems.

The digital landscape is no longer just about processing data—it's about understanding, learning, and adapting. From smartphones that predict our next text to recommendation systems that seem to know us better than we know ourselves, these technologies are quietly revolutionizing every aspect of our lives. But what exactly sets AI, Machine Learning, and Large Language Models apart? How do they work, and why should you care?

What is artificial intelligence?

Artificial intelligence is a broad field that refers to the use of technologies to build machines and computers that can mimic cognitive functions associated with human intelligence, such as seeing, understanding and responding to spoken or written language, analyzing data, and making recommendations. Although artificial intelligence is often thought of as a system in itself, it is a set of technologies implemented in a system to enable it to reason, learn, and act to solve complex problems.

What is machine learning?

Machine learning is a subset of artificial intelligence that enables a machine or system to learn and improve from experience automatically. Instead of being explicitly programmed with rules, machine learning uses algorithms to analyze large amounts of data, learn from the patterns they find, and then make informed decisions. Machine learning algorithms improve their performance as they are trained, that is, exposed to more data. A machine learning model is the output: what the program learns from running an algorithm on training data. In general, more (and more representative) training data tends to produce a better model.
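As a minimal illustration of learning from data instead of explicit programming, the sketch below fits a straight line to noisy examples with gradient descent. The rule y = 3x + 2 is used only to generate synthetic data; the training code never sees it and recovers the slope and intercept from the examples alone. This is a deliberately tiny, hypothetical example, not how production ML systems are built.

```python
import random
from typing import List, Tuple

Example = Tuple[float, float]  # one (input x, target y) training pair


def make_data(n: int, seed: int = 0) -> List[Example]:
    """Synthetic data from y = 3x + 2 plus Gaussian noise; the learner never sees this rule."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.1)))
    return data


def train(data: List[Example], epochs: int = 200, lr: float = 0.1) -> Tuple[float, float]:
    """Learn w and b in y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Partial derivatives of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(data: List[Example], w: float, b: float) -> float:
    """Mean squared error of the line w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)
```

Comparing `mse(data, *train(data, epochs=5))` with the error after the default 200 epochs shows the model improving as it trains longer on the same data.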

What are large language models?

Large Language Models (LLMs) represent a cutting-edge application of Machine Learning, specifically in the domain of natural language processing. These are advanced AI models trained on massive amounts of text data, enabling them to understand, generate, and manipulate human language with remarkable sophistication.
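A toy bigram model illustrates, at a tiny scale, the core idea behind language generation: learn from text which word tends to follow which, then generate new text one word at a time. This is an analogy only. Real LLMs replace simple word counts with transformer neural networks trained on vast corpora, and the function names below are hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return dict(follows)


def most_likely_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word` in training, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate_text(model: Dict[str, Counter], start: str, length: int, seed: int = 0) -> List[str]:
    """Extend `start` word by word, sampling each next word in proportion to its count."""
    rng = random.Random(seed)
    out = [start.lower()]
    for _ in range(length):
        counts = model.get(out[-1])
        if not counts:
            break  # no known continuation for this word
        words, weights = zip(*counts.items())
        out.append(rng.choices(words, weights=weights)[0])
    return out
```

Trained on "the cat sat on the mat the cat ran", the model predicts "cat" after "the", because that pair appears twice versus once for "mat".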

Key differences between AI, ML, and LLMs

Functional differences

While AI, Machine Learning, and Large Language Models are interconnected, they differ significantly in their scope, functionality, and application. Artificial Intelligence is the broadest concept, representing the entire field of creating intelligent machines. Machine Learning is a specific approach within AI that focuses on learning from data, while LLMs are a specialized type of Machine Learning model dedicated to understanding and generating human language.

Benefits of using AI, ML and LLMs together

AI, ML and LLMs bring powerful benefits to organizations of all shapes and sizes, with new possibilities constantly emerging. In particular, as the amount of data grows in size and complexity, automated and intelligent systems are becoming vital to helping companies automate tasks, unlock value, and generate actionable insights to achieve better outcomes.

Industries benefiting from AI, ML, and LLMs include, to name a few:

Healthcare and life sciences: Patient health record analysis and insights, outcome forecasting and modeling, accelerated drug development, augmented diagnostics, patient monitoring, and information extraction from clinical notes.

Manufacturing: Production machine monitoring, predictive maintenance, IoT analytics, and operational efficiency.

Ecommerce and retail: Inventory and supply chain optimization, demand forecasting, visual search, personalized offers and experiences, and recommendation engines.

Financial services: Risk assessment and analysis, fraud detection, automated trading, and service processing optimization.

Telecommunications: Intelligent networks and network optimization, predictive maintenance, business process automation, upgrade planning, and capacity forecasting.

Local LLMs are ideal for businesses that prioritize data privacy, security, and control over their AI systems. They can provide fast, reliable, and cost-efficient solutions with complete autonomy.

Cloud-based LLMs are great for businesses looking for easy-to-use, scalable solutions that don’t require a large upfront investment. However, they raise concerns such as higher recurring costs, reliance on third-party servers, and potential latency issues.

Hybrid models offer a compromise, allowing businesses to balance local control with cloud scalability. They are more flexible but have higher ongoing costs and can still experience latency when relying on cloud resources.

Ultimately, choosing the right model depends on your specific business needs. Are you more focused on security and privacy? A local LLM might be your best bet. Do you need scalability without worrying about hardware? A cloud-based LLM might work better. Or perhaps you need a mix of both, in which case a hybrid model can provide a flexible solution.
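A hybrid setup is often implemented as a small routing layer in front of the models. The sketch below assumes one possible policy, chosen for illustration: requests flagged as sensitive always stay on the local model, everything else goes to the cloud, and the local model serves as a fallback if the cloud endpoint is unreachable. The `LLMRequest` type, the `contains_sensitive_data` flag, and the backend callables are hypothetical stand-ins for real clients.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# A backend takes a prompt and returns generated text (stand-in for a real client).
Backend = Callable[[str], str]


@dataclass
class LLMRequest:
    prompt: str
    contains_sensitive_data: bool  # assumed to be set upstream, e.g., by a data classifier


def make_hybrid_router(local: Backend, cloud: Backend) -> Callable[[LLMRequest], Tuple[str, str]]:
    """Return a function that routes each request and reports which backend answered."""

    def route(req: LLMRequest) -> Tuple[str, str]:
        if req.contains_sensitive_data:
            # Privacy first: sensitive prompts never leave local infrastructure.
            return "local", local(req.prompt)
        try:
            # Non-sensitive work uses the cloud for scalability.
            return "cloud", cloud(req.prompt)
        except (ConnectionError, TimeoutError):
            # Cloud unreachable or too slow: fall back to the local model.
            return "local", local(req.prompt)

    return route
```

Which exceptions signal an unreachable cloud depends on the real client library; `ConnectionError` and `TimeoutError` are placeholders here. The same structure accommodates other policies, such as routing by prompt size or cost budget.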


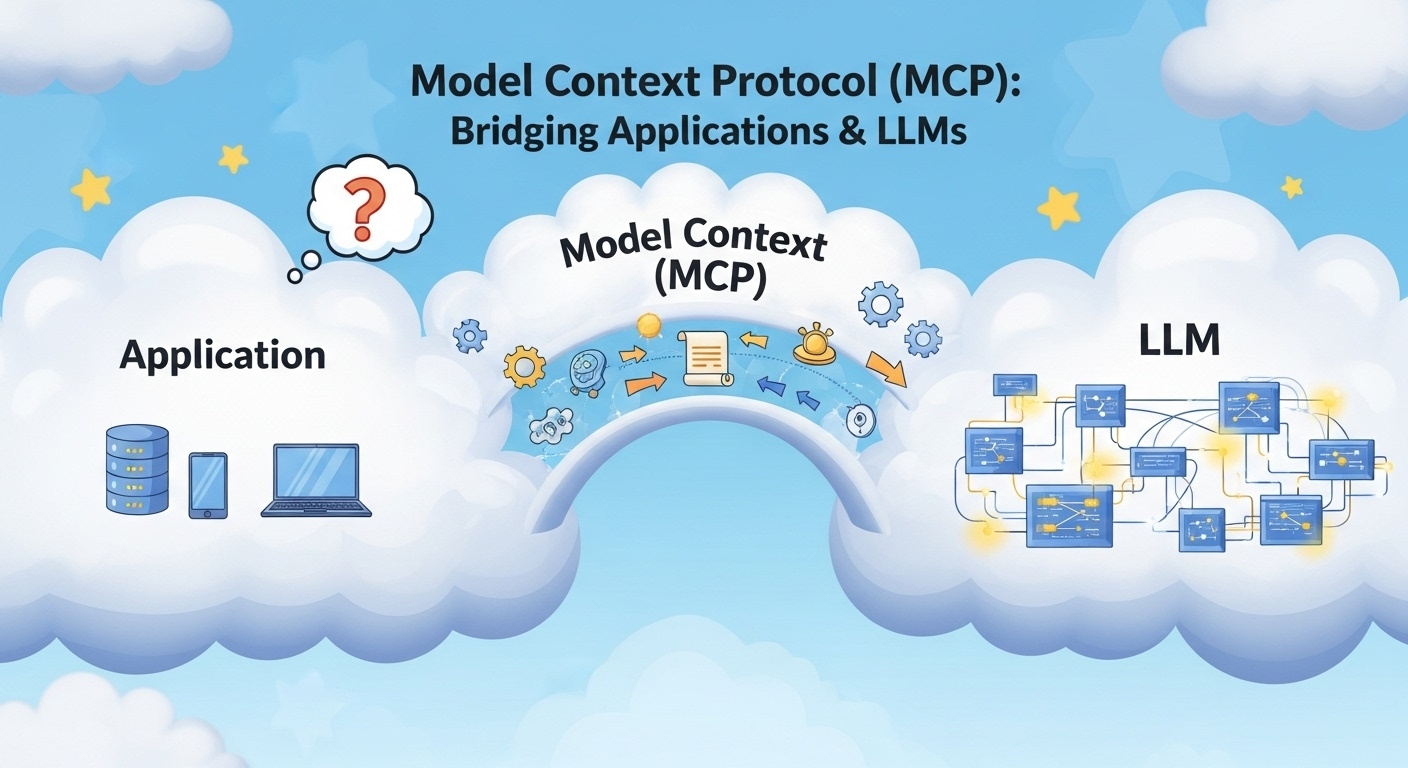

Unlocking Efficiency and Collaboration: The Benefits of the Model Context Protocol (MCP)

What is MCP?

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.
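Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. The sketch below hand-rolls a tiny handler for two methods defined by the MCP specification, `tools/list` and `tools/call`, to show the shape of the messages an AI application exchanges with a server. It is an illustration, not the official MCP SDK: it omits the `initialize` handshake, transports (such as stdio), and capability negotiation, and the `get_ticket_history` tool is a hypothetical stub.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical tool registry: name -> (description, JSON Schema of inputs, handler).
TOOLS: Dict[str, Tuple[str, Dict[str, Any], Callable[..., str]]] = {
    "get_ticket_history": (
        "Return recent support tickets for a customer (illustrative stub).",
        {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
        lambda customer_id: f"ticket history for {customer_id} (stub)",
    ),
}


def _error(req_id: Any, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle(message: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP methods tools/list and tools/call."""
    req = json.loads(message)
    req_id = req.get("id")
    method = req.get("method")
    if method == "tools/list":
        # Advertise each tool with its name, description, and input schema.
        result: Dict[str, Any] = {
            "tools": [
                {"name": name, "description": desc, "inputSchema": schema}
                for name, (desc, schema, _) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, "Unknown tool")  # JSON-RPC "Invalid params"
        text = tool[2](**params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(req_id, -32601, "Method not found")  # standard JSON-RPC code
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

Because every server speaks this same message format, an application can discover and call tools from any MCP server without custom integration code, which is the "USB-C" idea in practice.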

Why MCP?

MCP helps you build agents and complex workflows on top of LLMs. LLMs frequently need to integrate with data and tools, and MCP provides:

* A growing list of pre-built integrations that your LLM can directly plug into.

* The flexibility to switch between LLM providers and vendors.

* Best practices for securing your data within your infrastructure.

MCP Benefits

The Model Context Protocol (MCP) offers significant benefits to customers who use it alongside Large Language Models (LLMs), improving both usability and effectiveness. One of its primary advantages is that it streamlines communication between users and LLMs by contextualizing queries: an MCP-enabled application can pull relevant information from connected data sources and tools into each request. With requests framed in their specific context, customers receive responses that are more relevant and tailored to their unique needs. This reduces the time spent sifting through irrelevant information and enables faster decision-making and problem-solving.

Furthermore, MCP makes LLMs more adaptable across applications, turning them into more versatile tools for customers. Because the protocol supplies relevant context, LLMs can better handle industry-specific jargon, user preferences, and situational nuances. This can lead to more accurate outputs and higher overall user satisfaction. For businesses, it means LLMs can be deployed in a wider range of scenarios, from customer support to content creation, which can increase the return on investment.

Integrating MCP can also foster collaboration, allowing multiple stakeholders to contribute to interactions with LLMs. This is particularly useful in team settings, where diverse perspectives can enrich the contextual input provided to the model. By allowing this collective customization, MCP helps ensure that different viewpoints are considered, producing outputs that are comprehensive and aligned with the team's shared goals. Ultimately, by using the Model Context Protocol alongside LLMs, customers can improve operational efficiency and drive innovative outcomes.

Use Case Example: Customer Support Automation

Implementation with MCP:

1.) Contextual Inputs: When a customer submits a query about a software bug, MCP helps set the context for the LLM. Instead of processing the query in isolation, the application uses MCP to access relevant historical data about the customer, such as previous support tickets, subscription level, and the specific software versions they are using.

2.) Tailored Responses: Understanding the context allows the LLM to generate more specific and actionable responses. For example, if the customer is facing an issue with the latest software update, the LLM can reference troubleshooting steps for that specific update rather than giving generic answers.

3.) Collaborative Input: TechSolutions, the company in this example, has a support team with specialists in several areas (e.g., technical support, billing, and product features). Using MCP, team members can contribute context-specific keywords related to their expertise to the inquiry process. When a customer raises a billing question together with a technical issue, the LLM can draw on insights from both domains and produce a single response that addresses both concerns.
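The three implementation steps can be sketched as a prompt-assembly function: context retrieved about the customer (step 1) and keywords contributed by specialists (step 3) are combined with the query so the LLM can tailor its answer (step 2). All field names, the `CustomerContext` type, and `build_prompt` are hypothetical; in a real deployment the customer data would come from MCP servers connected to the ticketing and billing systems.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CustomerContext:
    """Customer data an MCP-connected system might supply (hypothetical fields)."""
    customer_id: str
    subscription: str
    software_version: str
    past_tickets: List[str] = field(default_factory=list)  # oldest first


def build_prompt(query: str, ctx: CustomerContext,
                 specialist_notes: Dict[str, List[str]], max_tickets: int = 3) -> str:
    """Combine a customer query with retrieved context and specialist keywords."""
    # Step 1: contextual inputs about the customer
    lines = [f"Customer {ctx.customer_id} ({ctx.subscription} plan) runs version {ctx.software_version}."]
    if ctx.past_tickets:
        # Keep the prompt short by including only the most recent tickets.
        lines.append("Recent tickets: " + "; ".join(ctx.past_tickets[-max_tickets:]))
    # Step 3: keywords contributed by specialists, one line per domain
    for domain, keywords in sorted(specialist_notes.items()):
        lines.append(f"{domain} guidance: " + ", ".join(keywords))
    lines.append(f"Customer question: {query}")
    # Step 2: ask the model to tailor its answer to everything above
    lines.append("Answer using the context above and address every concern raised.")
    return "\n".join(lines)
```

Capping the number of past tickets is one simple way to keep prompts within a model's context window; a production system might rank tickets by relevance instead.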

Outcome:

With MCP, TechSolutions experiences notable improvements:

1.) Increased Efficiency: The average response time to customer inquiries decreases as the LLM provides precise responses based on contextual data.

2.) Higher Customer Satisfaction: Customers report feeling better understood and valued due to the tailored answers they receive, leading to improved Net Promoter Scores (NPS).

3.) Reduced Support Load: Fewer tickets are escalated to human agents, allowing the support team to focus on more complex issues while the LLM handles routine queries effectively.

In summary, integrating the Model Context Protocol (MCP) not only improves the efficiency and accuracy of LLM-generated responses but also enables a cohesive, comprehensive approach to handling customer inquiries. Through contextual understanding, TechSolutions is able to deliver exceptional customer support, showcasing the benefits of MCP in a real-world application.